-

Excellent description of configuring OBS et al. for streaming code and development.

-

"That demo got the attention of venture capitalists. And when a cool-looking magical thing gets the attention of venture capitalists, discourse tends to spiral out of control." Good, even-handed look at GPT-3. It's both impressive and unexciting for me – there are so many underlying issues besides the 'magic', not to mention the relative failure rate, the complexity of any real-world deployment and, as ever, the lack of nuance in much of the media's discussion of text generation. This piece lays out some of the points about the latter well.

-

"Marky Markov is an experiment in Markov Chain generation implemented in Ruby. It can be used both from the command-line and as a library within your code." It's very fast, and basically does for me all the work I've been doing by hand on my projects. But better.

Markov Chocolates: A New Diversion

17 January 2012

A new year, and a new toy to begin it.

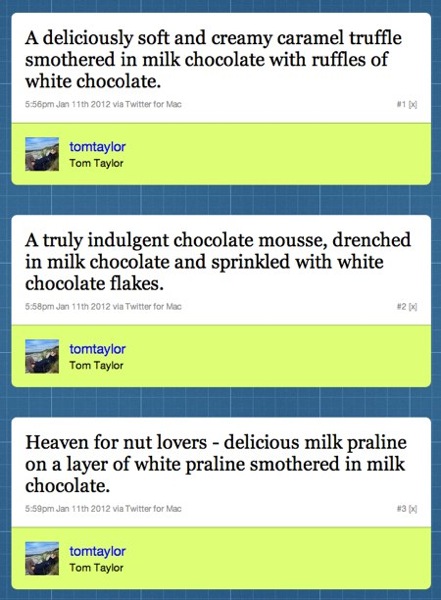

This all began when Tom started tweeting the prose from the back of a chocolate box.

One look at that and, having gagged a little on the truly purple prose, I could see only one obvious continuation: a machine to churn out chocolate descriptions infinitely.

Which was as good a time as any to play with Markov chains. Wikipedia will explain them in more detail, but if you’ve never encountered them, here’s a very rough explanation: Markov chains are systems that model what the next item in a sequence will be based on the items before it. The more previous items the model takes into account, the more closely its predictions follow the source.
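To make the “previous items” idea concrete, here’s a minimal Ruby sketch (Ruby because both tools mentioned here are written in it). It isn’t the Rubyquiz code or Marky Markov’s API; `build_chain` and the sample text are made up for illustration. The table maps each run of `order` words to every word that followed it in the source:

```ruby
# Build a Markov transition table: each key is an array of the previous
# `order` words, and each value lists every word seen straight after them.
def build_chain(words, order)
  chain = Hash.new { |hash, key| hash[key] = [] }
  words.each_cons(order + 1) do |window|
    chain[window[0...order]] << window[-1]
  end
  chain
end

corpus = "rich dark chocolate and rich milk chocolate with dark chocolate truffle".split

order1 = build_chain(corpus, 1)
order2 = build_chain(corpus, 2)

p order1[["chocolate"]]          # => ["and", "with", "truffle"]
p order2[["dark", "chocolate"]]  # => ["and", "truffle"]
p order2[["milk", "chocolate"]]  # => ["with"]
```

With one word of context, “chocolate” could be followed by three different words; with two, “dark chocolate” allows only two and “milk chocolate” just one, so a longer prefix keeps the output closer to the source.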

They’re often used in toy text generators. You seed them with source text, randomly pick a word from it, and then repeatedly choose what comes next based on the words that followed it in the source. What’s nice about this is that, with nothing more than a piece of maths and a tight corpus, we can produce text that usually reads like English without having to teach a computer something as complex as grammar. Of course, sometimes you get grammatical-yet-nonsensical English out, but that’s hardly a problem in our case.
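The generation loop described above might look something like this minimal Ruby sketch. It assumes word-level chains split on whitespace, a random starting point in the source, and a hard cap on output length; `generate`, `build_chain` and their parameters are hypothetical, not taken from the Rubyquiz solution or any library.

```ruby
# Build the transition table: previous `order` words => words that followed them.
def build_chain(words, order)
  chain = Hash.new { |hash, key| hash[key] = [] }
  words.each_cons(order + 1) { |window| chain[window[0...order]] << window[-1] }
  chain
end

# Generate text by starting at a random point in the source and repeatedly
# picking one of the words that followed the current prefix.
def generate(text, order: 2, max_words: 30, rng: Random.new)
  words = text.split
  raise ArgumentError, "source text is too short" if words.length < order
  chain = build_chain(words, order)

  # Seed the output with `order` consecutive words from a random position.
  start = rng.rand(words.length - order + 1)
  output = words[start, order]

  while output.length < max_words
    followers = chain.fetch(output.last(order), [])
    break if followers.empty? # dead end: this prefix was never followed by another word
    output << followers.sample(random: rng)
  end

  output.join(" ")
end

corpus = "rich dark chocolate and rich milk chocolate with dark chocolate truffle"
puts generate(corpus, order: 1, max_words: 12, rng: Random.new(42))
```

Because repeated followers are stored more than once, `sample` naturally favours the continuations that were most common in the source. Passing a seeded `Random` makes a run repeatable, which is handy when testing.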

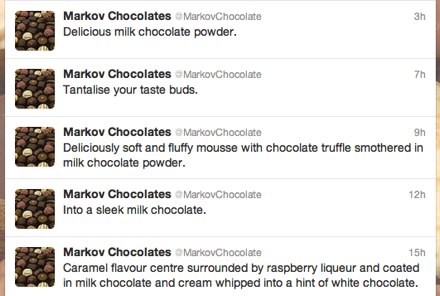

So I took the full prose from the back of Tom’s chocolates (Thornton’s Premium selection, for reference), some Markov text-generation code from an illuminating installment of Rubyquiz, and fiddled for a bit.

A short piece of work later and I had Markov Chocolates.

Roughly once every four hours (but it varies), you’ll get a fresh, tasty new Markov Chocolate in your Twitter feed. It’s another of my daft toys, but it still makes me chuckle. I’m thinking of expanding the corpus soon, and I hear the Markov corporation are keen to branch out into new product lines. For now, you can get your chocolate fix here.